Tommy (Xiuqi) Zhu

Ph.D. Student @ Northeastern University

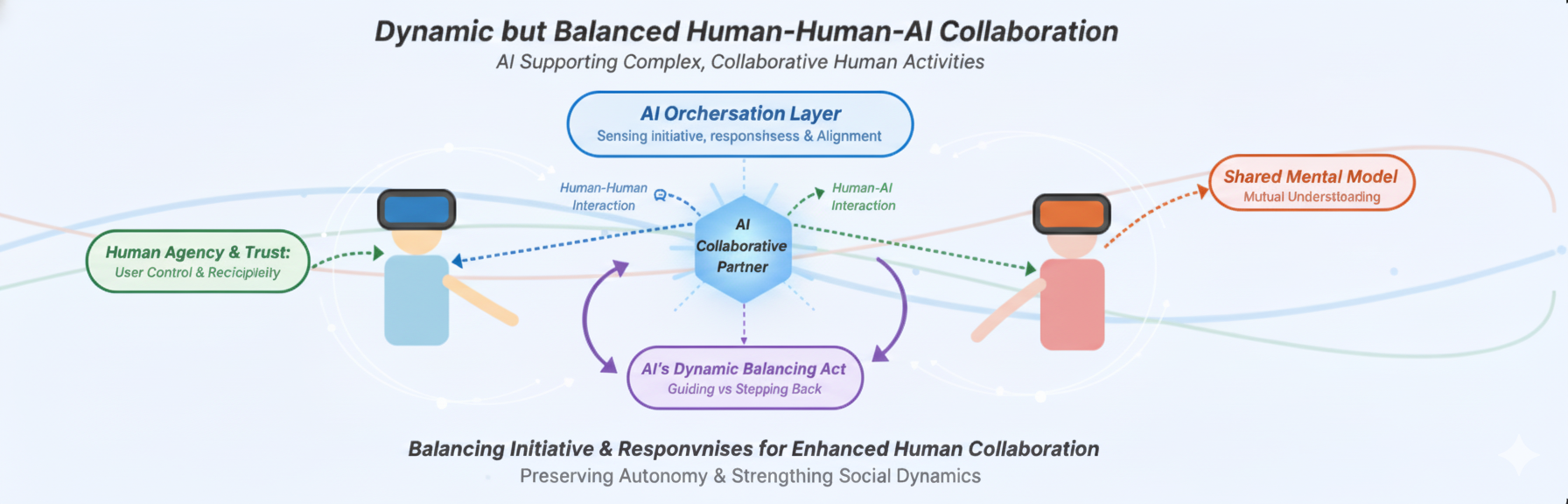

I am a third-year Ph.D. student in Interdisciplinary Media and Design @ Northeastern CAMD, advised by Prof. Eileen McGivney. My research lies at the intersection of Human-Computer Interaction, Extended Reality (XR), and AI. Specifically, I design and study AI-powered smart glasses that support complex, collaborative, and everyday activities, enhancing human–human collaboration without disrupting natural social interactions. I have also conducted side research on VR for simulation training, accessible technology for blind and low-vision students, and tangible systems for creativity. Previously, I interned as a UX Researcher at ByteDance Lark. I received my B.A. in Digital Media Arts from the Communication University of China.

Research Interests

My ongoing research focuses on integrating multimodal large language models (MLLMs) into XR to:

- Seamlessly interpret, understand, and respond to users' environments and needs in real time, enabling XR to support complex, collaborative, and everyday multi-user tasks.

- Transform interactive educational content into dynamic, personalized, and memorable simulation-based learning experiences through AI-driven, adaptive instruction.

Publications

* denotes equal contribution. “Xiuqi Zhu” and “Xiuqi Tommy Zhu” refer to the same author.

Peer-reviewed Full Conference and Journal Publications

Understanding the Practice, Perception, and Challenge of Blind or Low Vision Students Learning through Accessible Technologies in Non-Inclusive ‘Blind College’

International Journal of Human–Computer Interaction (2025)

In developing and underdeveloped regions, many 'Blind Colleges' exclusively enroll blind or low-vision (BLV) individuals for higher education. While advances in accessible technologies have facilitated the integration of BLV students into 'Integrated Colleges,' their implementation in 'Blind Colleges' remains uneven due to complex economic, social, and policy challenges. This study investigates the practices, perceptions, and challenges of BLV students using accessible technologies in a Chinese 'Blind College' through a two-part empirical approach. Our findings demonstrate that tactile and digital technologies enhance access to education but face significant integration barriers. We emphasize the critical role of early education in addressing capability gaps, BLV students' aspirations for more inclusive educational environments, and the systemic obstacles within existing frameworks. We advocate for leveraging accessible technologies to transition 'Blind Colleges' into 'Integrated Colleges,' offering actionable insights for policymakers, designers, and educators. Finally, we outline future research directions on accessible technology innovation and its implications for BLV education in resource-constrained settings.

Can You Move It?: The Design and Evaluation of Moving VR Shots in Sports Broadcast

IEEE International Symposium on Mixed and Augmented Reality (ISMAR 2023)

Virtual Reality (VR) broadcasting has seen widespread adoption in major sports events, owing to its ability to generate a sense of presence, curiosity, and excitement among viewers. However, we noticed that the still shots used in current VR sports broadcasts restrict the movement of VR cameras and hinder the VR viewing experience. This paper aims to bridge this gap through a quantitative user analysis exploring the design and impact of dynamic VR shots on viewing experiences. We conducted two user studies in a digital twin environment of a hockey game and asked participants to evaluate their viewing experience through two questionnaires. Our findings suggest that, for single clips, viewing experiences showed no notable difference between still and moving shots. However, across entire events, moving shots improved viewers' immersive experience, with no notable increase in sickness compared to still shots. We further discuss the benefits of integrating moving shots into VR sports broadcasts and present a set of design considerations and potential improvements for future VR sports broadcasting.

Posters, Extended Abstracts, Workshop Papers and Technical Reports

Designing VR Simulation System for Clinical Communication Training with LLMs-Based Embodied Conversational Agents

Conference on Human Factors in Computing Systems (CHI LBW 2025)

VR simulation in Health Professions (HP) education shows great potential, but fixed learning content with little customization limits its application beyond lab environments. To address these limitations in the context of VR for patient communication training, we conducted a user-centered study involving semi-structured interviews with advanced HP students to understand their challenges in clinical communication training and their perceptions of VR-based solutions. From this, we derived design insights emphasizing the importance of realistic scenarios, simple interactions, and unpredictable dialogues. Building on these insights, we developed the Virtual AI Patient Simulator (VAPS), a novel VR system powered by Large Language Models (LLMs) and Embodied Conversational Agents (ECAs) that supports dynamic and customizable patient interactions for immersive learning. We also provide an example of how clinical professors could use user-friendly design forms in VAPS to create personalized scenarios that align with course objectives, and we discuss future implications of integrating AI-driven technologies into VR education.

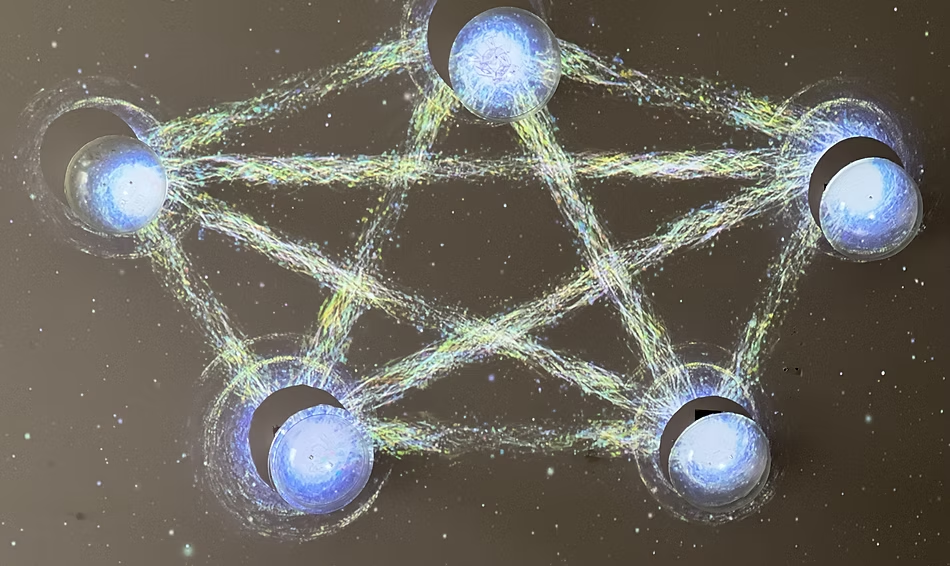

Co-Space: A Tangible System Supporting Social Attention and Social Behavioral Development through Embodied Play

Interaction Design and Children (IDC 2023)

Early impairments in social attention prevent children with autism spectrum disorder (ASD) from acquiring social information and disrupt their social behavior development. We present Co-Space, a tangible system that supports children with ASD aged 4–8 years in developing social attention and social behaviors through collaborative and embodied play in a classroom setting. Children can paint tangible hemispheres, insert them into a projection board, and then press or rotate the hemispheres independently or collaboratively to view dynamic audiovisual feedback. We leveraged the strengths of children with ASD (e.g., visual strengths and a preference for repetitive actions) by providing tangible hemispheres to engage their participation. We used codependent design and dynamic audiovisual cues to facilitate children's social attention and encourage collaboration and potential social behaviors. We demonstrated multiple ways to integrate the tangible system into classroom activities and discuss the benefits of designing tangible systems that support social attention and social behaviors for children with ASD through play in the classroom.

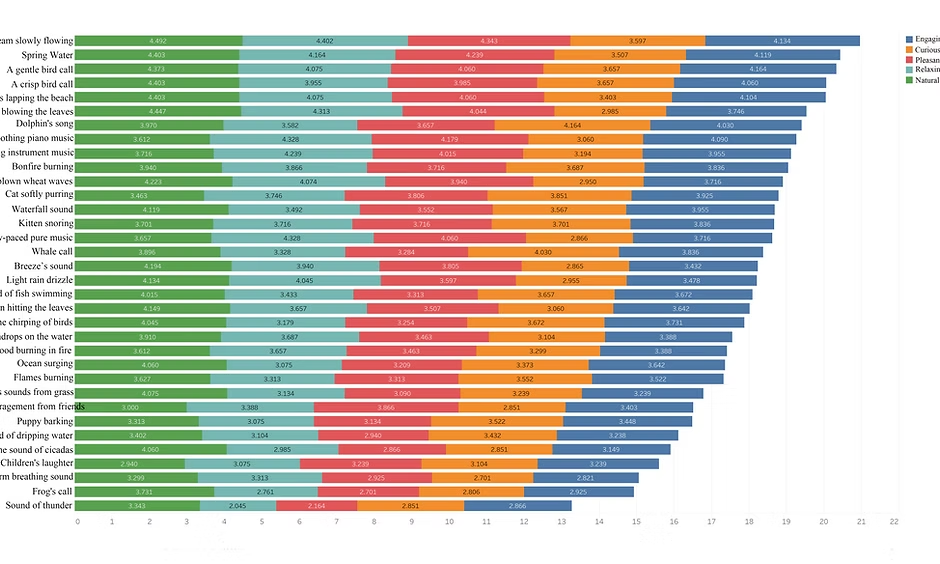

An Initial Attempt to Build a Natural Sounds Library based on Heuristic Evaluation

Human-Computer Interaction International 2022 (Poster)

Attention restoration theory (ART) predicts that the natural environment can restore depleted attentional resources. Previous studies found that presenting natural scenes visually can have a similar effect, but whether natural sounds do as well has not been fully examined. In this study, we used an exploratory approach to build a library of natural sounds. We surveyed 204 people, asking them to name ten types of 'natural' sounds and ten types of 'relaxing' sounds. The more than 1,800 answers collected were then coded by source and characteristics. Twenty-one categories of sounds emerged from these responses. Six of them were considered both relaxing and natural (e.g., birdsong), while the remaining categories were either only natural (e.g., thunder) or only relaxing (e.g., music). We discuss how this sound library can be used in future studies.

Manuscripts Under Review and In Preparation

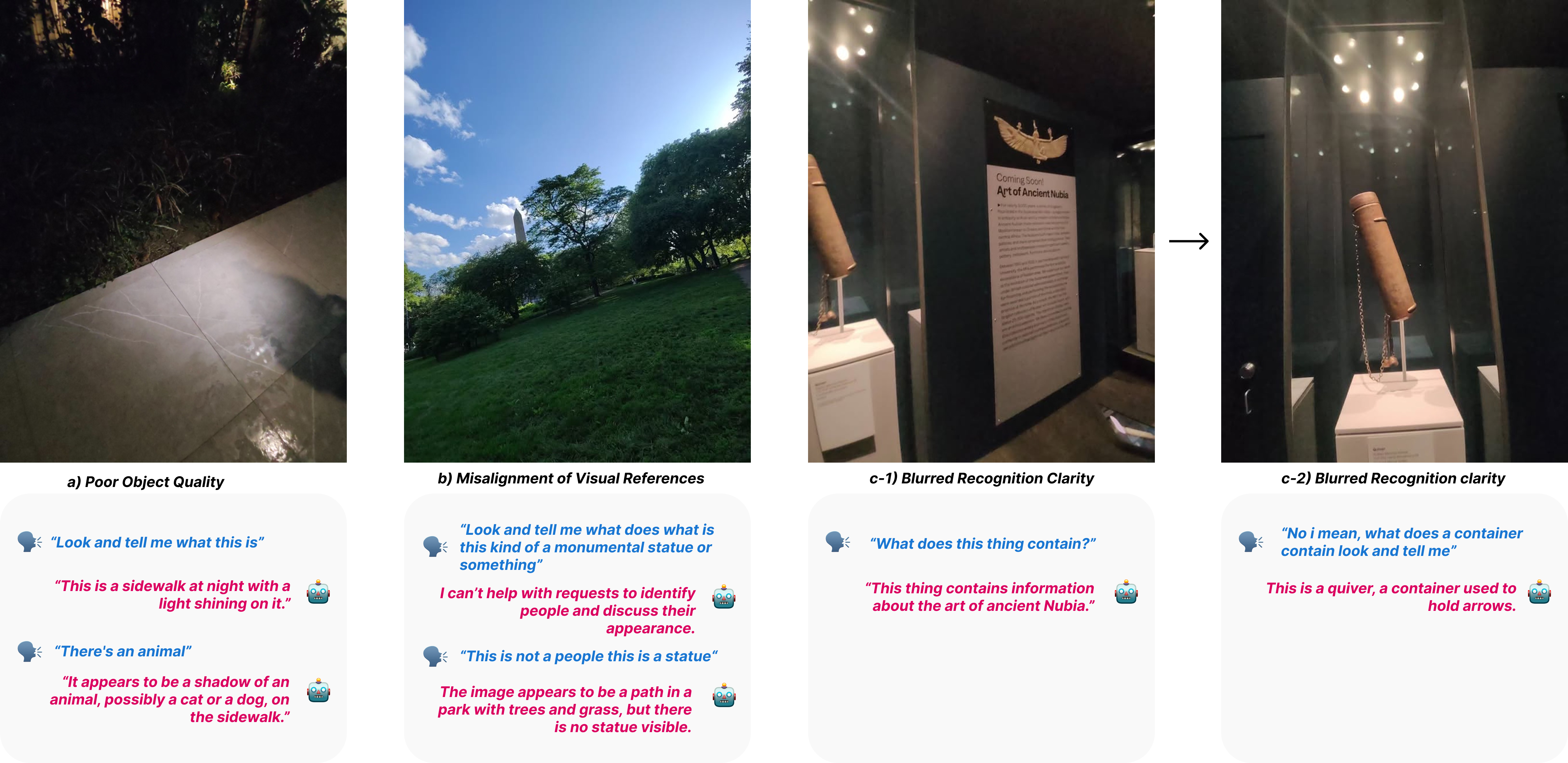

"Reimagining the Future of Smart Glasses Through Exploring the Conversational Successes and Breakdowns in Everyday Activities

In Submission, DIS 2026

Smart glasses hold the potential to support everyday activities by combining continuous environmental sensing with voice-only interaction powered by large language models (LLMs). Understanding how conversational successes and breakdowns unfold in everyday activities could better guide the design of future smart glasses. To investigate this, we conducted a two-phase study. First, a month-long collaborative autoethnography (n=2) identified situated patterns of successes and breakdowns with vision-based smart glasses. Building on these insights, we then engaged eight participants in multi-phase interviews to reflect on ideal roles and envision future interactions. Our findings reveal recurring breakdowns in intent recognition, perception, and shared understanding of activity context. We propose design considerations for future smart glasses, such as situating assistance in context, setting clear autonomy boundaries, and supporting social integration. We argue that smart glasses should be treated not merely as interactional novelties but as an opportunity to rethink them as a newly built social ecosystem.

"It doesn't look real, but it did feel real.": Designing and Evaluating an LLM-Powered VR Simulation for Health Profession Education

In Submission, AIED 2026

Clinical communication is essential for health professions (HP) students, yet simulation labs and prior VR systems often rely on rigid scripts that fail to capture the unpredictability of real patient encounters. We present VAPS, a Virtual AI Patient Simulator that integrates embodied conversational agents (ECAs) with large language models (LLMs) to support dynamic, voice-based interactions. We conducted a mixed-methods evaluation with 40 HP students from five disciplines, examining usability, sense of agency, emotional engagement, and perceived realism. Results show that VAPS was usable, reduced nervousness, and was perceived as immersive and emotionally authentic. Students viewed VAPS as a supplement rather than a replacement for simulation labs and envisioned future extensions, including diverse patient cases and interprofessional training. Our findings suggest that VR simulation should evolve from looking real toward fostering experiences that feel real, with conversational AI playing a central role in enabling more adaptive and authentic learning.

Reshaping Inclusive Interpersonal Dynamics through Smart Glasses in Mixed-Vision Social Activities

In Submission, DIS 2026

Meaningful social interaction is vital to well-being, yet Blind and Low Vision (BLV) individuals face persistent barriers when collaborating with sighted peers due to limited access to visual cues. While most wearable assistive technologies emphasize individual tasks, smart glasses introduce opportunities for real-time, contextual support in collaborative settings. To explore how smart glasses can affect interpersonal dynamics and support inclusive experiences in mixed-vision groups, we developed CollabLens, a smart glasses–based system, as a technology probe and deployed it in four mixed-vision workshops. Through mixed-methods analysis, we found that smart glasses can meaningfully support inclusive collaboration and strengthen BLV participants' autonomy in mixed-vision activities. However, while sighted participants viewed smart glasses as reducing their assistance burden and enhancing mutual understanding, BLV participants primarily valued them for independent task completion rather than social inclusion, due to persistent barriers such as technical unreliability, physical discomfort, and social embarrassment. We conclude by synthesizing challenges and opportunities for designing smart glasses that foster natural social dynamics in future inclusive settings.